Cross Perception

The human perceptual apparatus looks for simple explanations for sensory impressions. Which explanation it settles on depends not only on a person's experiences but also on their expectations. In this immersive video work we create an interplay between machine and human perception. "Cross Perception" asks how experiences and expectations shape our perception, and which perspectives dominate, blur or disappear in this interplay.

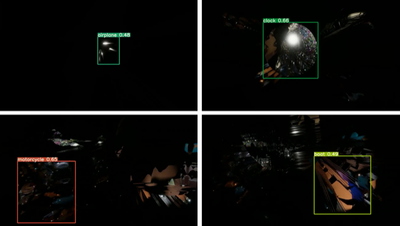

The visual part is built from photogrammetry scans made with a smartphone. The resulting 3D objects, with their faulty mesh structures and textures, form the basis of the visual structure in Unity. An artificial neural network for object recognition processes the 3D objects and, for each point in time and each image section, calculates the probability that each of a given set of object classes is present. If an object is detected over a certain period of time, a sound related to that object is played from the direction of the corresponding image section. For the auditory composition, granular synthesis and spectral freezes were used to create a space. The object recognition output was translated into MIDI Note On/Off commands and imported into Ableton Live to trigger samplers loaded with the corresponding sounds. The positions of the sound sources were calculated directly from the camera coordinates and the viewing direction.
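The step from detection probabilities to note events and sound positions can be illustrated with a minimal Python sketch. It is not the project's actual code: the threshold, the minimum number of frames, the note number, the yaw convention and the names `detections_to_notes` and `source_position` are all assumptions made for illustration.

```python
from dataclasses import dataclass
import math


@dataclass
class NoteEvent:
    frame: int   # frame index at which the event fires
    kind: str    # "note_on" or "note_off"
    note: int    # MIDI note number for the object class (hypothetical mapping)


def detections_to_notes(probs, note, threshold=0.6, min_frames=3):
    """Convert per-frame probabilities for one class in one image section
    into note events (threshold and min_frames are assumed values).

    A note_on fires once the probability has stayed at or above `threshold`
    for `min_frames` consecutive frames; a note_off fires at the first frame
    where it falls below the threshold again.
    """
    events = []
    run = 0         # consecutive frames at or above the threshold
    active = False  # True while a note is sounding
    for frame, p in enumerate(probs):
        if p >= threshold:
            run += 1
            if not active and run >= min_frames:
                events.append(NoteEvent(frame, "note_on", note))
                active = True
        else:
            run = 0
            if active:
                events.append(NoteEvent(frame, "note_off", note))
                active = False
    if active:
        # Close a note that is still sounding when the sequence ends.
        events.append(NoteEvent(len(probs), "note_off", note))
    return events


def source_position(cam_pos, yaw_deg, section_offset_deg, distance=1.0):
    """Place a sound source on the horizontal plane relative to the camera.

    Assumed convention: yaw 0 looks along +z, positive yaw turns towards +x.
    `section_offset_deg` is the horizontal angle of the image section
    relative to the viewing direction.
    """
    angle = math.radians(yaw_deg + section_offset_deg)
    x, y, z = cam_pos
    return (x + distance * math.sin(angle), y, z + distance * math.cos(angle))


if __name__ == "__main__":
    probs = [0.1, 0.7, 0.8, 0.9, 0.9, 0.2, 0.7]
    for event in detections_to_notes(probs, note=60):
        print(event)
    print(source_position((0.0, 0.0, 0.0), yaw_deg=0.0, section_offset_deg=90.0, distance=2.0))
```

Requiring several consecutive frames above the threshold keeps a brief, spurious detection from triggering a sound, which matches the "detected over a certain period of time" condition described above.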

The project is part of TRANSFORM, a collaboration between Angewandte, Johannes Kepler University, and Donau University Krems, funded by the Austrian Federal Ministry of Education, Science and Research. Those involved were Kathrin Hunze and Thomas Hack; Silvan David Peter and Jan Schlüter (Institute for Computational Perception, JKU Linz); and Christine Böhler and Martin Gasser (Department Cross-Disciplinary Strategies, Angewandte Vienna).

The work was shown at Ars Electronica 2020.

collaborators: